04/21 Table Read for The Art & State of Safety Journal Club

Excerpts from “Constructing Consequences for Noncompliance: The Case of Academic Laboratories” presented by Dr. Ruthanne Huising, Emlyon Business School

Full paper can be found here: https://journals.sagepub.com/doi/full/10.1177/0002716213492633

Introduction

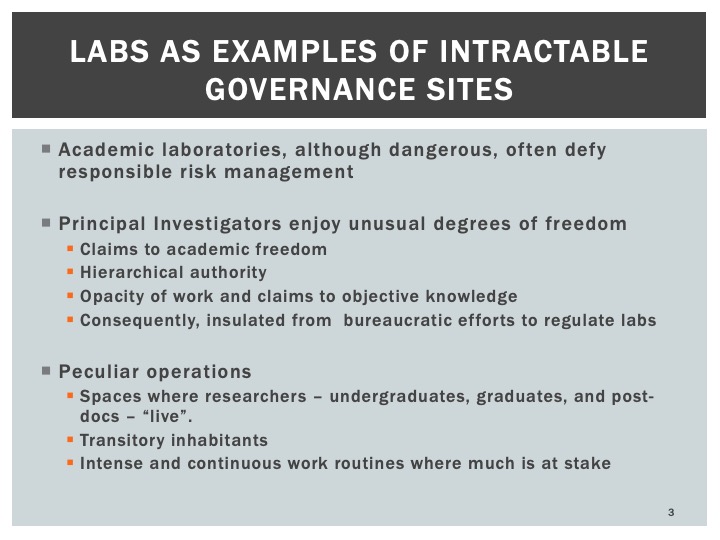

As a profession, contemporary scientists enjoy an unusual degree of autonomy and deference. Universities are professional-bureaucracies (Mintzberg 1979). One side of the organization is collegial, collectively governed, participatory, consensual, and democratic. The other side is a Weberian, hierarchical, top-down bureaucracy with descending lines of authority and increasing specialization. These organizational structures may allow for differential interpretations of and responses to legal mandates and differential experiences of regulation and self-governance. They often disadvantage regulators and administrative support staff, who occupy lower-status positions with less prestige, in their efforts to monitor, manage, and constrain laboratory hazards (Gray and Silbey 2011). What is regarded as academic freedom by the faculty and university administration looks like mismanagement, if not anarchy, to regulators[a]…[b]. Herein lies the gravamen of the risk management problem: the challenge of balancing academic freedom and scientific autonomy with the demand for responsibility and accountability[c][d][e][f][g][h].

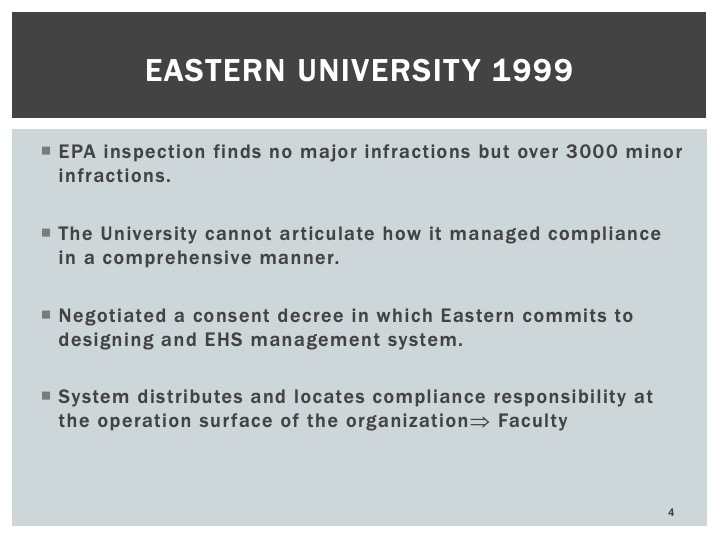

We…describe the efforts of one university, Eastern University, to create a system for managing laboratory health, safety, and environmental hazards and to transform established notions that faculty have little obligation to be aware of administrative and legal procedures.[i][j][k] We describe the setting—Eastern University, an Environmental Protection Agency (EPA) inspection, and a negotiated agreement to design a system for managing laboratory hazards—and our research methods.

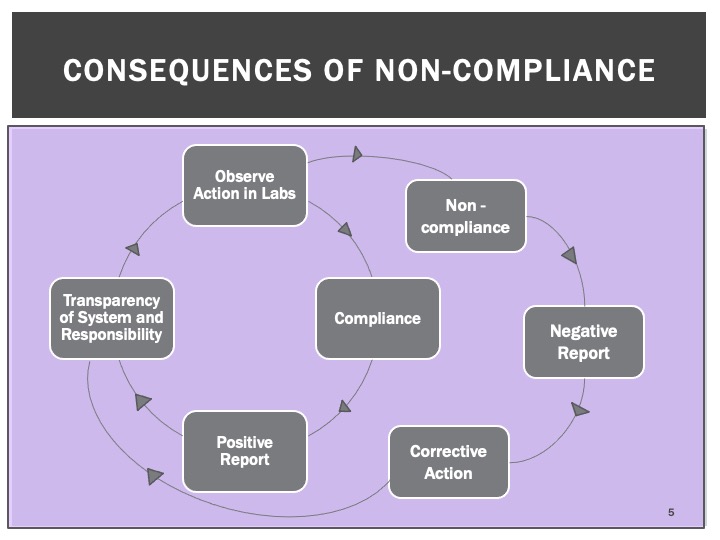

We describe efforts, through the design of the management system, to create prescribed consequences for noncompliant practices in laboratories. We show that in an effort to design a management system that communicates regulatory standards, seeks compliance with the requirements[l], and then attempts to respond to and correct noncompliant action, Eastern University struggled to balance case-by-case discretion consistent with academic freedom and scientific creativity with the demands for consistent conformity, transparency, and accountability for safe laboratory practices.

Constructing Organizational Consequences at Eastern University: Management System as Solution?

During a routine inspection of Eastern University, a private research university in the eastern United States, federal EPA agents recorded more than three thousand violations of the Resource Conservation and Recovery Act (RCRA), the Clean Air Act (CAA), the Clean Water Act (CWA), and their implementing regulations. Despite the large number of discrete violations, both the EPA and the university regarded all but one as minor infractions. The university’s major failure, according to the EPA, was its lack of uniform practices across departments and laboratories on campus.[m][n][o][p] There was no clear, hierarchical organizational infrastructure for compliance with environmental laws, no clear delineation of roles and responsibilities, and, most importantly, no obvious modes of accountability for compliance….Without admitting any violation of law or any liability, the university agreed in a negotiated consent decree to settle the matter without a trial on any issues of fact or law.

At Eastern, the management system reconfigured the work of staff and researchers by moving compliance responsibility away from centralized specialists to researchers working at the laboratory bench. Scientists became responsible for ensuring that their daily research practices complied with city, state, and federal regulations. [q][r]This shift in responsibility was to be facilitated by the creation of operating manuals, inspection checklists, enhanced training, and new administrative support roles.[s][t][u][v][w][x][y][z][aa][ab][ac][ad][ae]

Research Methods: Observing the Design of an Environmental, Health, and Safety (EHS) Management System

From 2001 through 2007, we conducted ethnographic fieldwork at Eastern University to investigate what happens when compliance with legal regulations is pursued through a management system.

The fieldwork included observation, interviewing, and document collection. It was supplemented by data collection with standardized instruments for some observations and via several surveys of lab personnel and environmental management staff. For this article, we draw primarily from notes taken at meetings of the committee designing the system, presenting notes from the discussions concerning a catalog of consequences for poor performance required by the consent decree.

Building Responsiveness and Responsibility into an EHS-MS: Consequences for Departures from Specified Operating Procedures

The final version [of the management system manual] was agreed to only after hundreds of hours of negotiations among four basic constituencies: the academic leadership; the university attorney overseeing the consent decree; the environmental health and safety support staff located within the administration, a nonacademic hierarchy; and the lab managers and faculty within the academic hierarchy.[af][ag][ah]

These descriptions explain that each person, or role incumbent, works with a committee of faculty and staff of safety professionals that provides consultation, monitoring, and recommendations, although legal responsibility for compliance is placed entirely within the academic hierarchy, with ultimate disciplinary responsibility in a university-wide committee.

>What will constitute noncompliance?

Despite the adoption of the original distinctions between minor, moderate, and very serious incidents [described in a section not included in this Table Read], discussions continued about the relationship between these categories and the actual behavior of the scientists. How would the system’s categories of “acceptable” and “unacceptable” actions map onto normal lab behaviors? How much would the lives of the lab workers be constrained by overly restrictive criteria?[ai][aj][ak] As Professor Doty said, no one wanted the system to be like police surveillance. Labs are places where science students live, after all.[al][am] Once a basic list of unacceptable conditions and actions was created and communicated through safety training, the salient issue would be intentionality, as it is in much conventional legal discourse.

>Who will identify noncompliance?

Marsha (attorney for University): I think we’re going to need to be more specific, though, for university-wide committee policy. If the consequence of a particular action is termination from Eastern, then there’s policy in place for that, but what leads up to that? When do you shut down a lab?[an][ao] When do you require faculty to do inspections in departments like XYZ? A lot of people here have partial responsibility for things—the system may work well, but it’s not[ap] always clear who’s responsible. Where we need to end up is to remember this key link to the PI. In order for this to work, I think it really comes down to the PI accepting responsibility[aq], but how they deal with that locally is a very personal thing[ar][as][at][au]. I don’t think we should prescribe action, tell the PI how to keep untrained people out of [the] lab. But we need to convince the faculty of this responsibility.[av]

>How will those formally responsible in this now clearly delineated line of responsibility be informed?

Who will tell the professor that his or her lab is dirty or noncompliant?[aw][ax][ay][az][ba][bb][bc][bd]

Informing the responsible scientist turns out to be a complex issue at the very heart of the management system design, especially in the specifications about distribution of roles and responsibilities. In the end, Eastern’s EHS-MS named a hierarchy of responsibility, as described above, from the professor, up through the university academic hierarchy, exempting the professional support staff.[be][bf]

Despite the traceable lines of reporting and responsibility on the organizational charts, consultation, advice, and support were widely dispersed, so that the enactment of responsibility and holding those responsible to account were constant challenges and remain so to this day. Most importantly, perhaps, because the faculty hold the highest status and yet hold the lowest level of accessibility and accountability, the committee was vexed as to how to get their attention about different types of violations.

Marsha: …So, while you’re defining responsibilities and consequences, make sure you don’t relieve the PI of his duties. You can assign them helpers, but they need to be responsible[bg][bh][bi]. There can be a difference between who actually does everything and who is responsible[bj]. You need to make sure people are clear about that.

Marsha: We need to convince the faculty of this responsibility….This is what we should be working on this summer. This is unfortunately the labor intensive part—we need to keep “looping back”—going to people’s offices and asking their opinions so they don’t hear things for the first time at [some committee meeting].[bk]

Marsha: We need department heads and the deans to help us with PIs in the coming months…. We need to get PIs—if we don’t get them engaged the system will fail….We will get them by pointing out all the support there is for them, but bottom line is they have to buy into taking responsibility.[bl][bm][bn][bo][bp][bq][br][bs]

In the end, the system built in three formal means to secure the faculty’s attention and acknowledgement of their responsibility for laboratory safety: (1) A registration system was implemented, in which the EHS personnel went from one faculty office to another registering the faculty and his or her lab into the system’s database. The faculty were required to sign a document attesting that they had read the list of their responsibilities and certifying that the information describing the location, hazards, and personnel in their lab was correct for entry into the database. (2) All faculty, as well as students, were required to complete safety training courses. Some are available online, some in regularly scheduled meetings, and others can be arranged for individual research groups in their own lab spaces. The required training modules vary with the hazards and procedures of the different laboratories. (3) Semiannual university inspections and periodic EPA inspections and audits were set up to provide information to faculty, as well as the university administration and staff, about the quality of compliance in the laboratories. Surveys of the faculty, students, and staff, completed during the design process and more recently, repeatedly show that familiarity with the EHS system varies widely.

Although the audit found full compliance in the form of a well-designed system, it also revealed that many of the faculty and some administrators did not have deep knowledge of it,[bt] despite the effort at participatory design.

>What action should be taken?

Consequences vary with the severity of the incident.

It was essential to the design of the system that there be discretionary responses to minor incidents, which are inevitably a part of science.

It was assumed[bu] that regular interaction with the lab safety representative, discussions in group sessions, and regular visits by the EHS coordinator would identify these and correct them on the spot with discussion and additional direction. The feedback would be routine, semiautomatic in terms of the ongoing relationships between relatively intimate colleagues in the labs and departments. No written documents would even record the transaction unless it was an official inspection; weekly self-inspections by the safety reps were not to be fed into the data system.[bv][bw][bx][by][bz][ca][cb][cc][cd][ce]

Consequences for moderately serious incidents include one or more of the following actions: oral or written warning(s) consistent with university human resources policies; a peer review of the event with recommendations for corrective action; a written plan by a supervisor [cf]that may include retraining, new protocols, approval from the department EHS committee, and a follow-up plan and inspection; or suspension of activities until the corrective plan is provided, or completed, as appropriate.

A list of eight possible consequences[cg][ch][ci] accompanies the definition of a very serious incident. The list begins with peer review and a written plan, as in moderately serious incidents, but then includes new items: appearance before the university’s EHS committee or other relevant presidential committees to explain the situation, to present and get approval of a written plan to correct the situation, and to implement the plan; restriction of the involved person’s authority to purchase or use regulated chemical, biological, radioactive, or other materials/equipment; suspension or revocation of the laboratory facility’s authorization to operate; suspension of research and other funds to the laboratory/facility; closure of a lab or facility; and applicable university personnel actions, which may include a written warning, suspension, termination, or other action against the involved person(s) as appropriate.

These descriptions illustrate the sequential escalation of requirements and consequences and display, rather boldly we think, the effort of the committee to draft a legal code for enforcement of the management system’s requirements.

When the committee completed its work, Marsha, the lead attorney, went to work editing it. When it was returned to the committee, the changes, many of which were grammatical rather than substantive, nonetheless so offended the group that participation in the planning process ceased for a long while.[cj][ck][cl] The associate dean communicated to the EHS leadership that morale among the coordinators and other committee members from the laboratories was low and that their willingness to do their best was being compromised. They believed that the decisions they made collectively in the working meetings were being undermined and changed so that at subsequent meetings, documents did not read as they were drafted; they believed that crucial “subtleties, complexities and nuances to policies and proposals” were being ignored, if not actively erased. If they were to continue working together, they asked for complete minutes and officially recorded votes.

Nonetheless, it was the scientists’ and their representatives’ fear that the system would in fact become what a system is designed to be: self-observant and responsive and, thus, would eventually and automatically escalate what were momentary and minor actions into moderate, if not severe, incidents. This anxiety animated the planning committee’s discussions, feeding the desire to insert qualifications and guidelines to create officially sanctioned room for discretionary interpretation.

>Who will be responsible for taking action to correct the noncompliant incident?

Clearly, most minor incidents are to be handled in situ, when observed, through informal conversation, and the noncompliant action is supposed to be corrected by the observer’s instruction and the lab worker’s revised action. Some noncompliance is discovered through inspections that inform the PI of noncompliant incidents; a follow-up inspection confirms that the PI instructed her students to change their ways. Very few incidents actually move up the pyramid of seriousness.

A significant proportion of the chronically reported incidents are associated with the physical facilities and materials in the laboratories[cm], such as broken sashes on the hoods, eye washes not working or absent, missing signage, inadequate tagging on waste, empty first aid kits, or crowding—simply not enough benches or storage areas for the number of people and materials in the lab…Corrections are not always straightforward or easy to achieve.[cn] Tagging of waste, proper signage, and adequate first aid kits may be fixed within a few minutes by ordering new tags and signs from the EHS office and a first aid kit through the standard purchasing process. While the lab may order its own supplies, it must wait for the EHS office to respond with the tags and signs. The hood sashes and eye wash repairs depend on the university facilities office, which is notoriously behind in its work and thus appears unresponsive. In nearly every conversation about how to respond to failed inspections, discussion turned to the problems with facilities (cf. Lyneis 2012[co])…crowding is often the consequence of more research funding than actual space: the scientist hires more students and technicians than there are lab benches. This has been a chronic issue for many universities, with lab construction lagging behind the expansion of research funding over the last 20 years.

Just as the staff experienced the faculty as uninterested in the management system, the scientists experienced a “Don’t bother me” attitude in the staff, because often the ability to take corrective action does not rest entirely with the persons formally responsible for the lab.[cp][cq][cr][cs][ct] The PI depends on the extended network of roles and responsibilities across the university to sustain a compliant laboratory. This gap between agency (the ability to perform the corrective action) and accountability (being held responsible and liable for action) characterizes the scientists’ experience of what they perceive as the staff’s attitude of “Don’t bother me.” The management system is, after all, a set of documents, not a substitute for human behavior.

Discussion and Conclusion

In this article, we have used the case of Eastern University to show how coordination and knowledge problems embedded in complex organizations such as academic research laboratories create intractable regulatory and governance issues and, unfortunately, sometimes lead to serious or even deadly outcomes. Overlaying bureaucratic procedures on spaces and actors lacking a sense of accountability to norms that may in real or perceived terms interfere with their productivity highlights the central challenge in any regulatory system: to balance autonomy and expertise with responsibility and accountability. Under these conditions, accountability may be, in the end, illusory.

…Rather than an automatically self-correcting system of strictly codified practices, Eastern’s EHS-MS relies on case-by-case discretion that values situational variation and accommodation. Compromises between conformity and autonomy produce a system that formally acknowledges large and legitimate spaces for discretionary interpretation while recognizing the importance of relatively consistent case criteria and high environmental, health, and safety standards. [cu][cv][cw][cx]Marsha, Eastern’s principal attorney, noted the difficulties of balancing standardized ways of working in high-autonomy settings, voicing concern about “the exceptions [that] gobble up the rule.” The logic of the common law is reproduced in the EHS-MS because, like our common law, only some cases become known and part of the formal legal record: those that are contested, litigated, and go to appeal. In this way, the formal system creates a case law of only the most unusual incidents while the routine exceptions gobble up the rule.

…safer practices and self-correcting reforms are produced by surrounding the pocket of recalcitrant actors who occupy the ground level of responsibility with layers of supportive agents who monitor, investigate, and respond to noncompliant incidents. In the end, we describe not an automatic feedback loop but a system that depends on the human relationships that constitute the system’s links.[cy][cz][da]

Group Comments

[a]Interesting set of different perspectives…

[b]The quote “They spent all this time wondering if they could, that no one thought to think about if they should” is the first thing that comes to mind when I read this sentence.

[c]Why are these viewed as diametrically opposed? They can be complementary.

[d]In practice, have you found this to be the case? I find this perspective interesting, because at least in my experiences and the experiences of those I’ve interacted with, practically, they do often conflict (or, at least are *perceived* as conflicting, which really may be all that matters, culturally)

[e]In many of the issues I have explored as a grad student, I have noticed that this “freedom” often translates into no one actually being responsible for things for which someone really SHOULD be responsible. And if faculty step up to take responsibility, they are often taking on that responsibility alone.

[f]I think it’s strongly dependent on awareness (often via required training) and leadership expectations. In instances where both were sound and established, I’ve seen these elements to be complementary.

[g]I wonder what this training would look like. In my experience, a lot of training is disregarded as an administrative hoop to jump through every once in a while. I also think it’s wildly dependent on the culture of the university, as it exists. There is often little leverage over faculty to modify their behavior if it’s not (1) hired in, (2) positively incentivized, or (3) socially demanded by faculty peers. It seems difficult to me to try to newly instill such training requirements, with the goal of making PIs aware of their responsibility for ensuring safety. If there are no consequences (short of a major accident drawing the eye of the law), no social pressure to engage in safe behavior, and no positive incentive structure to reward participation, why would faculty change their behavior? Many of them are already aware of the safety requirements—many of them just choose to prefer short-term productivity and to prioritize other metrics. In an ideal world, I think I would agree with you that training at the University level would be sufficient, but I think there needs to be a much broader discussion of faculty *motivation*, not just their awareness.

[h]Agreed, the culture/environment aspects are huge in terms of how such awareness training is received. There are multiple incentive models, and I’d hope that legal liability isn’t the only one that would lead to proactive action.

[i]Sounds like a training deficiency if that’s the perception.

[j]What are you defining as “training”?

[k]Awareness of their obligations/requirements for both administrative (university) and regulatory elements. This should be provided by the institution.

[l]which should include process safety

[m]This is a bit vague. Uniform practices? A one size fits all approach?

[n]This caught me out a bit as well. If all they were finding were minor infractions, do we actually have a problem here?

[o]I’m curious what these “minor infractions” were, though. What’s the scale? What’s the difference between a major and minor infraction? 3,000 opportunities for individual chemical exposures or needle pricks may be considered small when it comes to the EPA, but it seems quite substantial when it comes to individual health and safety

[p]In general, minor infractions involve things like labeling of waste containers, storage times in accumulation areas, etc., without any resulting physical impacts. EPA writes these up and can fine for them, but the infractions don’t involve physical damage.

[q]Interesting…. this is what caused problems for us at Texas Tech before our accident. Individual oversight often meant no oversight…

[r]Agreed, some form of checks-and-balances should be implemented to verify elements are being completed.

[s]In my experience, this is often a technique employed by higher administration to shift the blame onto frontline researchers and their supervisors.

Combined with an underfunded EHS department, this situation results in no oversight or enforcement of these requirements.

[t]But administrators are not experts, as the PIs claim to be. If PIs want freedom and recognition as experts, then they do need to be held accountable, but also EMPOWERED by being provided with support mechanisms. Responsibility without empowerment is useless.

[u]My eyes immediately went to the “new admin support roles.” If you have someone who understands both the regulations and the relationships in the department, I would think that person would be more effective than someone who shows up from a different department once per year with a checklist.

[v]Agree with Anonymous. If you want the freedom, you take on the responsibility. Don’t want the responsibility, hand over the freedom.

[w]I agree that faculty should be responsible. The common argument I hear is that faculty aren’t in the lab all the time and can’t always be responsible for what happens day to day. Sort of a cynical view that says that faculty are the idea people and others (students?) should be the implementers…

[x]I don’t think anyone expects them to be responsible for everything every single day though. I think the idea is that they should be responsible for setting the tone in their lab and having standards for the graduate researchers working in their labs – and they should be making an effort to visit their labs in order to walk around and make sure everything is operating as it should.

[y]@Jessica I agree, but if they aren’t overseeing the day-to-day elements, they need to assign that responsibility to someone and make that assignment known to the research group. AND they need to support and empower that individual.

[z]I think I agree with Jessica here. Perhaps someone with an eye and responsibility for safety NOT being in the lab on the day-to-day is part of the problem. Maybe it *should* be a responsibility of PIs to visit their labs, to organize them, to keep up safety standards, inventory, etc. Or perhaps it is their responsibility to hire someone to do this specifically. Perhaps these responsibilities should be traded for teaching responsibilities, and thus institutions with a high research focus can focus on hiring research teachers and managers (PIs) who are trained as such, and teachers who are actually trained as teachers.

[aa]In the 1970s and 1980s, externally funded PIs would hire people to do this kind of stuff (often called “lab wives”), but funding for this function was shifted to additional student support.

[ab]Blending what Sara and Anonymous said: while student support has gone up, it has also become more transactional, in that it is more linked to teaching duties, while research assistantships tend to be the exception in many “research heavy universities”.

[ac]I think the responsibility of the PI should be first to open the door for safety-related discussions amongst the group, and then to make the final decision on acceptable behavior if consensus is not achieved. Following that, they should bear the responsibility for any ramifications of that decision. I think they can achieve awareness of what is happening in their lab without being there every day, but they need to continuously allow the researchers to voice their concerns.

[ad]I also think that PIs might need tools to help them be accountable, particularly new faculty.

[ae]This is the unfortunate part of interdepartmental politics: how far are new faculty willing to speak up when, in 5 years, the same older faculty members will be part of their tenure decision?

[af]This goes to early commentary on the shifting of regulatory compliance to researchers: were any researchers involved in these discussions or were PIs/lab managers speaking for them in these discussions?

[ag]I’d hope some (if they existed at this institution) laboratory safety officers were participants.

[ah]Lab safety officers were often active participants, but often on a parallel track to the faculty level discussions. I guess which group carried more clout in the system design?

[ai]The wording of this question seems to imply that lab safety impedes lab productivity.

[aj]Building upon this, there is evidence that when safety concerns are not an issue (due to correct practices) productivity is actually better.

[ak]I don’t have evidence for this, but I think it depends on how prevalent compliance is. If everyone is being safe in their labs then I think overall productivity would go up. If some people start cutting corners then while they may get short term improvement in productivity, in the long term everybody suffers (evacuations and accident investigation halting research, bad laboratory practices accumulating, etc.)

[al]Does this imply that “students” are a class of people whose rights and responsibilities are different from other people in the laboratory?

[am]Or to put it another way – why are students living in their labs?

[an]One of the EHS professionals involved in these discussions told me “When you shut down one laboratory you have a mad faculty member; when you shut down a second, you have an environmental management system.”

[ao]Faculty do notice when their colleague’s labs are shut down…

[ap]In what way is the system “working well”? What mission is being served by the way the original system was structured?

[aq]for compliance in their lab

[ar]This seems contradictory to earlier comments that researchers are responsible for their own compliance

[as]The government does not believe this. They believe that the president of the institution is responsible for institutional compliance. The president of the institution may or may not believe that.

[at]However, since the presidents turn over much more quickly than faculty, faculty often outwait upper admin interest in this issue

[au]I hear this a lot, but then I have to wonder what the word “responsibility” means in this context. The president of my university has never been to my lab, so how would he be responsible for it?

[av]The subtext I see here is that it would be awfully expensive to have enough staff to do this.

[aw]A ‘dish best served’ by faculty peers rather than university admin staff or legal.

[ax]I don’t believe faculty will have these conversations with each other due to politics. We can have someone they respect make assessments through a myriad of approaches, including having industry people come visit.

[ay]Or in other words, who is willing to be the bearer of bad news?

[az]I like this. When I have discussed having issues in my own lab with others outside of my institution, so many respond with “tell the head of the department.” And I’m surprised that they don’t seem to realize that this is fraught with issues – this person’s labs are right across the hall from mine – and this person visits his lab far more often – and this person has seen my lab – he already knows what is going on and has already chosen to “not see it.” Now what?

[ba]Building on this, being “head of department” does not actually mean being higher in the hierarchy of authority. These people are still colleagues at the same level of authority most of the time.

[bb]I remember hearing during the research process for the NAS report on Academic Lab Safety, that Stanford Chem had an established structure for true peer inspections of other faculty spaces and in some instances risk assessment of new research efforts. From what I recall that was successful and implemented as that was the expectation. So maybe it just needs to be the expectation, rather than optional. Another alternative is you staff the administrative side with folks that have research experience, then the message may be better received.

[bc]I really like that idea – that faculty would be engaged in the risk assessment of new research efforts. You are right that it would have to be established as a norm at the university – not as optional work – or work that goes to a committee that virtually no one is on.

[bd]The biosafety world is run by a faculty-involved oversight committee for grant proposals, for historical funding reasons. My experience is that faculty are very reluctant to approach this process critically as peer review, but it does put biosafety issues on the agenda of the PI writing the grant.

[be]Interesting that this group is left out. As an EHS employee, I see an opportunity for us to provide consistency and impartiality across departments. We can also disseminate best practices that are already implemented in some labs.

[bf]Absolutely, and serve as a valuable mechanism for knowledge transfer.

[bg]Yes, delegate task responsibility but not ultimate liability.

[bh]Yes, we have a form for the delegation of tasks to the Lab Safety Coordinator (LSC). Ultimate responsibility stays at the PI level, but PIs can delegate tasks using this form.

[bi]That is great that it’s formally documented!

[bj]Lawyers believe this. Safety professionals not so much.

[bk]Should this responsibility be part of the onboarding process for new laboratory workers in general? I would note that “Eastern U” has had problems with grad student and postdoc misbehavior in the lab, including criminal acts against lab mates. These are handled by police rather than EHS, but EHS is often involved in assessing the degree of the problem.

[bl]Herein lies one of the problems with the unorganized academic hierarchy that PIs fall into. While systems that improve safety should always attempt to be non-punitive, at the end of the day the repeat offenders still have the freedom not to comply.

This can become problematic if that particular faculty member has a more influential role and position in their department.

[bm]I agree with you that in this case study faculty may have the freedom not to comply until the situation escalates. However, this is not always the case. Some universities have the authority to shut down labs. There may be a mad faculty member, but it is a powerful statement to the rest of the faculty to get their acts together.

[bn]The thing about this, though, is that shutting down a lab is very nearly the “nuclear” option. I would imagine it would be incredibly problematic to determine who deserves to have their lab shut down and who doesn’t, and what has to occur before the lab is allowed to reopen.

[bo]The other problem this presents is the impact of a lab closure on “innocent” grad students in the lab and collaborators with the lab, both on campus and externally. These factors can make for a very confusing conversation with PIs, chairs, and deans, which I’ve had more than once.

[bp]In my experience, the only time admin and safety committees have even considered lab shutdown is when there’s outright defiance of the expectations and no effort made to resolve identified safety and compliance issues. I’m not sure I’d considered those criteria as being a ‘nuclear’ option, seems more like enforced accountability IMHO.

[bq]I feel like what you just described is what I meant by “nuclear” option. There doesn’t seem to be anything between “innocuous notice” and “lab shutdown.”

[br]Agree on the point as well about graduate students being the ones who actually “pay” in a lab shutdown. If a faculty member is tenured, then they are getting their paycheck and not losing their job while their lab is shutdown. However, it directly harms the graduate students in no uncertain terms.

[bs]@Jessica Then it sounds like the institution lacks some form of progressive discipline/resolution structure if there are only two options. Sadly, some of that (progressive discipline structure) needs to be created with the involvement of HR to ensure labor laws and bargaining unit contracts aren’t violated. But there absolutely needs to be a spectrum of progressive options before lab shutdown is all that’s left. And yes, I agree that the graduate student(s) bear a disproportionate penalty at times in the event of a lab shutdown.

[bt]Is this after having the faculty sign on to the program through the registration process?

[bu]I would say “hoped” here rather than “assumed”

[bv]Why no documentation of the informal interactions/feedback? Or was it optional? From a regulator’s perspective, if it isn’t documented it never happened.

[bw]Good point. The accountability system could take the informality into account but if a lab racks up a bunch of minor, informal infractions it is probably indicative of culture.

[bx]I also worry that not having it documented could lead to the ability for the feedback to be “forgotten” or denied as to having happened in the future if a larger infraction occurs.

[by]This is something that was discussed as problematic in the paper. If it is undocumented, no one knows just how many warnings an individual has had. It is also one of the problems with solely relying on researchers watching out for each other. You don’t know how many times a conversation was had and whether the issue was fixed OR the person just got tired of correcting their colleague.

[bz]We are trying a pilot program using this approach: an EH&S building sweep to build relationships with labs and let them know we don’t just visit to document non-compliance. We are not sure what we’ll document, since these are friendly visits.

[ca]Have you read Rosso’s paper about the SPYDR program they do at BMS? I thought it was a very interesting approach that could be adapted to the academic environment.

[cb]https://drive.google.com/file/d/1oCu1q6xqc12PpArTaDQ3lmlIvk-zpFbk/view?usp=sharing

[cc]Thank you Jessica!

[cd]What I particularly like about the feel of this approach is that they are having management intentionally visit labs in order to ASK THE RESEARCHERS what they feel like the problems are. This speaks to me because, as a graduate student, I was frustrated with EHS inspections in which they were focused on their checklist and minor infractions that didn’t matter while they walked right past really problematic things that were not on the checklist – and I would’ve much rather been encouraged to discuss those issues!

[ce]@Jessica Great point about inspectors being too ‘tunnel visioned’ on their compliance checklist and not able to be truly receptive to bigger issues, whether observed or vocalized during (collaborative) discussion with the research group members.

[cf]Is this a supervisor of the lab or to the lab?

[cg]There was a PhD dissertation at “Eastern U” that described how this was negotiated and the impact of those negotiations on the design of the computerized database that was used to implement the system. It’s a fascinating story to read.

[ch]Are you able to quickly find a link to share here?

[ci]That would be great to see!

[cj]This seems very strange to me. Was any additional information provided about the substantive changes that were made that could’ve potentially justified this type of response?

[ck]In working with the EPA, an agreement we were working on was almost scuttled by too many commas in a key sentence. It took 6 months to resolve because both sets of lawyers were convinced that those commas changed the meaning entirely. I couldn’t see the difference myself.

[cl]The way through this was for the “clients” (technical people from the school and the EPA) to get together without the lawyers in the room, come to a mutual understanding, and then tell the lawyers to knock it off.

[cm]Hardly fair to hold PIs accountable but give the university a pass on providing a safe workplace. Although, since this is outside the “academic freedom” morass, it should be easier to address.

[cn]Or are expensive and inappropriate for the PI to do.

[co]I have some of the same problems with facilities

[cp]I am disappointed that this is not a bigger part of this paper. Faculty are often characterized as “not caring” when I think the situation is much more complex than that. As a graduate student trying to get problems fixed, I can certainly attest to how difficult this is – even to know who to go to, who is supposed to be paying for it, who is supposed to be doing the work – and while I am chasing all of that, I am not getting my research done. It can be atrocious to try to get responsiveness within the system – and I can see why it would be viewed as pointless to chase by researchers at least at some institutions.

[cq]As an EHS staff member I can see a cultural rift between the groups. Comes down to good leadership at the top which is in short supply. Faculty and staff all work for the same university…

[cr]@Jessica I agree that getting resolution on infrastructure issues as a graduate student can be a huge time sink and at times even ineffective. That’s where having an ally/collaborator from the professional staff or EHS groups can be invaluable. They often know the structure and can help guide said efforts.

[cs]I would be interested in what percentage of the faculty had this attitude. In my experience, it represents about 20% of an institutional faculty population; 20% of the faculty are proactive in seeking EHS help; and the remaining 60% are willing to go with the departmental flow with regards to safety culture.

[ct]” Faculty and staff all work for the same university…” and work on the same mission, although in very different ways.

Another challenge is that many faculty don’t identify strongly with their host institution and often perceive that they need to change schools in order to improve their lab’s resources or their personal standing in the hierarchy.

[cu]And if we don’t have experts in actual scientific application looking at the problems or identifying problems, then the system is broken. A lab can look “clean and safe” but be filled with hazards due to its processes. I believe a two-tier audit system needs to be in place: first tier, compliance; second tier, safety in lab processes.

[cv]YES! I have often been frustrated when having discussions in the “safety sphere” on these issues. By coming at it from the “processes” perspective, the compliance rules make a lot more sense.

[cw]Reliance on point-in-time inspections can be misleading. My group (EHS) does this for all labs across campus. It is a good start: it ensures the lab space is basically safe. But what is missed is what happens when people work in the lab (processes). In a past life, in a different industry, I worked with a group to develop best practices for oil spill response. If response organizations subscribed to the practices, they had guidelines on how to implement response strategies. Not super prescriptive, but they set some good guardrails. Might be useful here?

[cx]Experts in process safety are often soaked up by larger industries with much more predictable processes. The common-sense questions they ask (What chemicals do you use? Who will be doing the work?) are met with blank stares in academia.

[cy]I think this is a profound observation which leads to the success or failure of this kind of approach.

[cz]…this is also foreshadowing for some of her other papers on this case study :).

[da]Spooky!