All posts by Ralph Stuart

ACS Webinar: Navigating Questions About Reproductive Health When in the Lab

October 6, 2022 @ 2:00 PM EDT

A recent analysis of the current guidance from more than 100 academic institutions’ Chemical Hygiene Plans (CHPs) indicates that the burden to implement laboratory reproductive health and safety practices is often placed on those already pregnant or planning conception. This report also found inconsistencies in the classification of potential reproductive toxins by resources generally considered to be authoritative, adding further confusion.

The publication can be found in JACS:

A Call for Increased Focus on Reproductive Health within Lab Safety Culture

Catherine P. McGeough, Sarah Jane Mear, and Timothy F. Jamison

https://pubs.acs.org/doi/10.1021/jacs.1c03725?ref=PDF

Other recent peer-reviewed publications on the topic are:

What to Expect When Expecting in Lab: A Review of Unique Risks and Resources for Pregnant Researchers in the Chemical Laboratory

https://pubs.acs.org/doi/10.1021/acs.chemrestox.1c00380?ref=PDF

Nature Comment on pregnancy in the lab, February 2022

https://www.nature.com/articles/s41570-022-00362-0

The presenters were Robin M. Izzo, Assistant Vice President of Environmental Health and Safety at Princeton University; Dr. Rich Wittman, Clinical Assistant Professor of Medicine at Stanford Health Care; and Dr. Katie McGeough, a Graduate Student at Boston College School of Social Work. They discussed the findings reported in the Journal of the American Chemical Society and provided both environmental health and safety and medical perspectives on risks to fertility, pregnancy, and other aspects of reproductive health for all people working in the laboratory.

What You Will Learn

- Understand the current state of knowledge relative to the potential reproductive health impacts of laboratory work, including chemical, biological and physical concerns

- Identify questions that people considering pregnancy or currently pregnant should ask about their work in the laboratory

- Find and evaluate literature resources related to reproductive health issues in the lab

Webinar Presentation

- The recording of this webinar is available to ACS members at http://www.acs.org/webinars

- You can download the presentation file here:

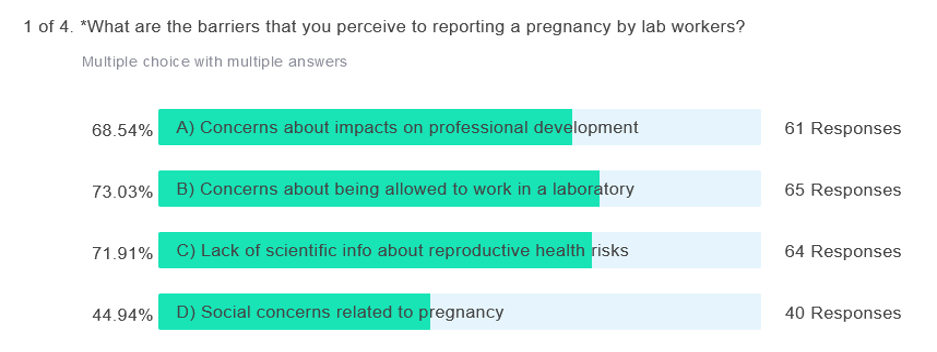

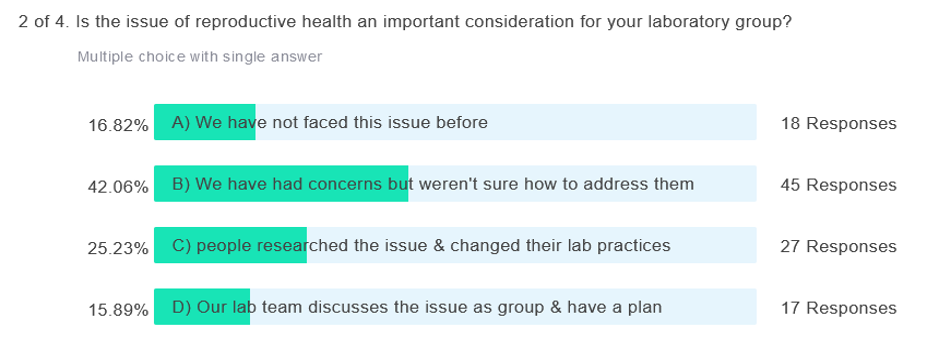

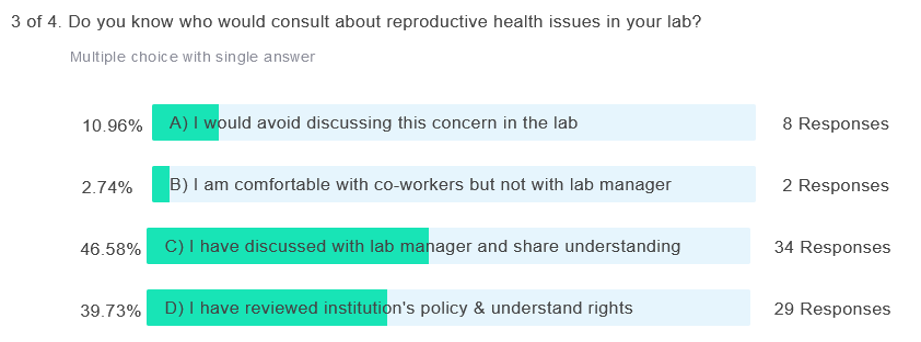

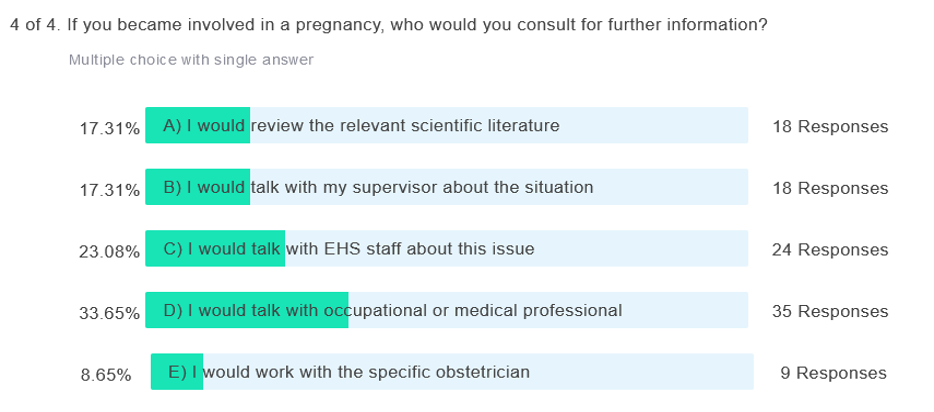

The audience poll responses were:

Indicators of Success in Laboratory Safety Cultures and General Papers

Over the past decade, there has been increasing interest in improving the safety culture of laboratory settings. How do we identify and implement indicators of success of these efforts? When are quantitative cultural measurements available and when do we need to rely on qualitative indicators of movement forward? Both theoretical ideas and concrete examples of the use of this approach are welcome in this symposium.

3745133 – Indicators of success in a safety culture, Ralph Stuart, CIH, CCHO, Presenter

3733703 – What’s in a name? Mapping the variability in lab safety representative positions, Sarah Zinn, Presenter; Imke Schroeder; Dr. Craig Merlic

3755577 – Factors for improving a laboratory safety coordinator (LSC) program, Kali Miller, Presenter

3754791 – Empowering student-led organizations to create effective safety policies, Angie Tse, Presenter

3738023 – Quantitative and qualitative indicators of safety culture evolution by the joint safety team, Demetra Adrahtas, Presenter; Polly Lynch; Sofia Ramirez; Brady Bresnahan; Taysir Bader

Division of Chemical Health & Safety General Papers

3752873 – Case studies and chemical safety improvements, Sandra Keyser, Presenter

3737640 – Storytelling is an art in building a “safety first” culture, Irene Cesa, Presenter; Dr. Kenneth P Fivizzani; Michael Koehler

3728705 – Vertical safety engagement through new community connections committee of the UMN joint safety team, Vilma Brandao, Presenter; Zoe Maxwell, Presenter; Jeffrey Buenaflor, Presenter; Gretchen Burke, Presenter; Steven Butler, Presenter; Xin Dong, Presenter; Nyema Harmon, Presenter; Erin Maines, Presenter; Taysir Bader; Brady Bresnahan

3752771 – Health and safety information integration: GHS 2021 version 9 in PubChem, Jian Zhang, Presenter; Evan Bolton

3754174 – Laboratory databases: Applications in safety programming, Magdalena Andrzejewska, Presenter

3748086 – Boundary conditions: Designing and operating laboratory access controls for safety, Joseph Pickel, Presenter

Safety Across the Chemical Disciplines and CHAS Awards Symposium

3741150 – Safety in the catalysis research lab, Mark Bachrach, Presenter

3754630 – Risk, safety, and troublesome territoriality: Bridging interdisciplinary divides, John G Palmer, PhD, Presenter; Brenda Palmer

3751492 – Risk-based safety education fosters sustainable chemistry education, Georgia Arbuckle-Keil, Presenter; David Finster; Ms. Samuella Sigmann, MS, CCHO; Weslene Tallmadge; Rachel Bocwinski; Marta Gmurczyk

02:00pm – 04:05pm CDT

Division of Chemical Health & Safety Awards Symposium

Brandon Chance, Organizer, Presider

3754798 – Interdepartmental initiatives to improving campus chemical safety, Luis Barthel Rosa, Presenter

3738511 – Building and sustaining a culture of safety via ground-up approaches, Quinton Bruch, Presenter

3750224 – Safety net: Lessons in sharing safe laboratory practices, Alexander Miller, Presenter

3740645 – Governing green labs: Assembling safety at the lab bench, Susan Silbey, Presenter.

Dr. Silbey’s presentation covered laboratory safety management ideas from her recent publications:

- Gokce Basbug, Ayn Cavicchi, and Susan S. Silbey. "Rank Has Its Privileges: Explaining Why Laboratory Safety Is a Persistent Challenge." Journal of Business Ethics, 19 June 2022. https://doi.org/10.1007/s10551-022-05169-z

- Joelle Evans and Susan S. Silbey. "Co-Opting Regulation: Professional Control Through Discretionary Mobilization of Legal Prescriptions and Expert Knowledge." Organization Science, Articles in Advance, 10 December 2021. https://doi.org/10.1287/orsc.2021.1525

- Ruthanne Huising and Susan S. Silbey. "To protect from lab leaks, we need ‘banal’ safety rules, not anti-terrorism measures." STAT, 13 August 2021. https://www.statnews.com/2021/08/13/banal-lab-safety-rules-keep-us-safe/

The Nomination Process for CHAS Awards

The Division of Chemical Health and Safety, Inc. of the American Chemical Society (CHAS) is currently soliciting nominations for several awards recognizing outstanding leadership and service in the area of chemical health and safety. The deadline for all 2023 award nominations is December 1, 2022. You can download the complete guidelines for CHAS Award Nominations here.

Information about each of the CHAS awards, including nomination applications and a list of past recipients, can be found at the links below:

The CHAS Graduate Student Safety Leadership Award is given to recognize a graduate student researcher or recent graduate (within 3 years of latest degree) who demonstrates outstanding leadership in the area of chemical health and safety in their laboratory, research group, or department. Each year the award is dedicated to a different historical figure in chemical safety. The award consists of $2000, provided as an honorarium and to support travel to the fall national meeting. An optional, additional $500 will be provided to support a new or ongoing project that promotes graduate student safety at the home school.

The Howard Fawcett Chemical Health and Safety Award recognizes outstanding individual contributions to the field of Chemical Health and Safety. The award consists of a commemorative plaque and a $500 prize for expenses so that the recipient can present at an award symposium at the fall ACS national meeting.

The Tillmanns-Skolnik Award was established in 1984 to recognize and honor outstanding, long-term service to the Division of Chemical Health and Safety. The award consists of a commemorative plaque and a $500 prize for expenses so that the recipient can present at an award symposium at the fall ACS national meeting.

The Laboratory Safety Institute Graduate Research Faculty Safety Award recognizes graduate-level academic research faculty who demonstrate outstanding commitment to chemical health and safety in their laboratories. The award consists of an engraved plaque and a $1,000 prize for expenses so that the recipient can present at an award symposium at the fall ACS national meeting.

The SafetyStratus College and University Health and Safety Award is given to recognize the most comprehensive laboratory safety program in higher education (undergraduate study only). The College and University award consists of a commemorative plaque and a $1000 prize for expenses so that the recipient can present at an award symposium at the fall ACS national meeting.

The CHAS Student Registration Award is given to encourage student participation in CHAS programming at ACS national meetings. The award provides reimbursement in the amount of the full-conference registration fee (undergraduate, graduate, or pre-college teacher student rate, as applicable). Two student registration awards are given for each ACS national meeting.

Nominations are also solicited for special service and fellow awards:

The CHAS Lifetime Achievement Award recognizes a lifetime of dedication and service to the American Chemical Society, the ACS Division of Chemical Health and Safety, and the field of chemical health and safety. The awardee gives a 20 – 30 minute keynote presentation at the Awards Symposium at the fall ACS national meeting.

The Fellows Awards recognize CHAS members in good standing who have provided continuous service. Nominees who meet the criteria will receive a certificate.

A Service Award is given to the immediate past division Chair at the end of their term. The chair receives an ACS past chair’s pin and plaque.

Please contact the CHAS Awards Committee Chair, Brandon Chance, for more information. Mr. Chance can be reached at 214-768-2430 or awards@dchas.org.

EHS Leadership and Diversity

Remember: No matter where you go, there you are. Samuella Sigmann

9:10am-9:35am What is your safety role? An introduction to structured safety programs. Mary Heuges

9:35am-10:00am Safety leadership and organizational design Mary Koza

10:35am-11:00am Workplace safety needs diversity to ensure that everyone is safe Frankie Wood-Black

11:00am-11:25am Improving researcher safety: Activities of the University of California center for laboratory safety Imke Schroeder

11:25am-11:50am Circadian rhythms based safety for managing the risks of human factor manifestation. Amir Kuat

Service Awards

Statement of Award Purpose

Service Awards are given to the immediate past Division Chair at the end of their term. The chair receives an ACS past chair’s pin and plaque.

Award Amount and Recognition

An ACS Past Chair’s pin and an appropriate plaque of appreciation to be presented at an Awards Symposium during the fall ACS national meeting following the chair’s term.

CHAS Fellows Award

Download the Nomination Application Form for this Award Here: [Click to Download CHAS Fellows Nomination Form]

Statement of Award Purpose

Fellows Awards recognize CHAS members in good standing who have provided continuous, active service to CHAS and who have made significant contributions to the Division during their active service.

Award Recognition

Certificate and lapel pin presented at the Awards Symposium during the Fall ACS national meeting.

Description of Eligible Nominees

An eligible nominee must be a current member of the division who has been a member for at least fifteen years or has been a member for at least seven years (continuous membership) and has been active in CHAS activities for at least three years. Active membership is defined below.

CHAS Fellows have shown support for the goals and activities of CHAS and have, through personal effort, helped CHAS achieve those goals. The following activities designate a member as “active”:

- Division volunteer service including holding office in the division, chairing division committees, etc.

- Organization of symposia, major presentations, or other programming at national/international meetings

- Contributions to safety in ACS Publications

- Leadership of or other contributions to Division outreach activities

There is no limit to the number of CHAS Fellows named each year.

Eligible Sources of Nominations

- Self-nomination

- Any CHAS division member

Roster of CHAS Fellows

(Those listed in bold are also ACS Fellows)

CHAS Graduate Student Safety Leadership Award

Download the Nomination Application Form for this Award Here: [Click to Download GRADUATE STUDENT SAFETY LEADERSHIP AWARD in Word format]

Statement of Award Purpose

This award is given to recognize a graduate student researcher or recent graduate (within 3 years of latest degree) who demonstrates outstanding leadership in the area of chemical health and safety in their laboratory, research group, or department.

Each year the award is dedicated to a different historical figure in chemical safety.

Award Amount and Recognition

This award is made possible by the generosity of an anonymous donor.

The award consists of:

- A $500 honorarium will be payable directly to the award recipient

- A certificate that includes information about that year’s dedicatee

- An invitation to deliver a 15 – 20-minute presentation at the CHAS Awards Symposium. The presentation should describe the work recognized by the award.

- An optional additional $2,000 will be made available to support a project that promotes graduate student safety at the home school and/or for travel expenses to the CHAS Awards Symposium at an ACS national meeting, as applicable

The recipient of this award is expected to deliver a 15 – 20-minute presentation at the CHAS Awards Symposium at the ACS Fall national meeting in the year that they receive the award. The presentation should describe the work recognized by the award.

Description of Eligible Nominees

Eligible nominees are current master’s or Ph.D. candidates in research fields or those who have graduated within the past three years who demonstrate values and behaviors consistent with the criteria of this award. The researcher may be a member of any academic department at their institution provided that chemical use is a significant part of their research. Nominees do not need to be members of ACS or CHAS to be eligible for this award.

Award Criteria

The primary criterion for this award is demonstrated leadership of specific project(s) that support a proactive safety culture in the laboratory, research group, and/or department where the student’s research or teaching responsibilities take place. Such projects empower their peers and students to address technical and cultural safety concerns related to chemical usage, in either the teaching or research environment.

Examples of work that calls on other safety leadership skills (e.g. participation in extramural safety conferences and organizations, publication of safety related information in research papers, development of new approaches to safety education in the lab) will support the award application but will not replace the need for a specific example of project-based leadership.

Required support for nomination

- Cover letter from the nominator describing why the nominee is deserving of this award.

- Two letters of support from the institution’s Environmental Health and Safety Office, Senior Administration, or Departmental leadership.

- Descriptions or samples of research safety projects or initiatives led by the nominee

Eligible Sources of Nominations

- Self-nomination

- Teammate (fellow student or lab member)

- Department chair

- EH&S Department

- Vice Provost for Research or another Senior Administrator

- Peer

Additional Information about this Award

This award was proposed in 2020 by an anonymous donor and was developed by the CHAS Awards committee in collaboration with the donor. The award was first given in 2021.

Previous Awardees:

2022, given in honor of the Radium Girls

Quinton Bruch, University of North Carolina at Chapel Hill

2021, given in honor of Sheharbano “Sheri” Sangji

- Graduate Student Team Leaders:

  - Jessica De Young, University of Iowa

  - Alex Leon Ruiz, University of California, Los Angeles

  - Sarah Zinn, University of Chicago

  - Cristan Aviles-Martin, University of Connecticut

SafetyStratus College and University Health and Safety Award

Download the Nomination Application Form for this Award Here: [Click to Download SafetyStratus College and University Nomination Form in Word format]

Statement of Award Purpose

This award is given to recognize the most comprehensive chemical safety programs in higher education (undergraduate study only).

Award Amount and Recognition

- $1,000 Honorarium

- Engraved plaque including name of recipient and sponsor logo to be presented to winner at award symposium

This award is made possible by the generosity of SafetyStratus.

The recipient of this award is expected to deliver a 15 – 20-minute presentation at the CHAS Awards Symposium at the ACS Fall national meeting in the year that they receive the award. The presentation may be on any topic related to chemical safety.

Description of Eligible Nominees

The nominee may be a college/university chemistry academic department, an entire campus, or an EH&S office. Joint-nominations including chemistry departments and other offices will also be accepted. Preference will be given to those submissions which include participation from the chemistry department.

Previous recipients of this award will be considered eligible again only 10 years after they received the award. Their current undergraduate lab safety program must differ significantly from the program as it was when the award was previously received. In this case, supporting documentation must highlight the program improvements since the year that the award was last received.

Detailed award criteria are given below.

One award is given per year.

Award Criteria

1. Chemistry Department’s safety policy statement

2. Chemical Hygiene Plan(s) for instructional laboratories

3. Evidence that safety concepts, such as risk assessment and utilization of chemical-safety information, are included in the teaching curriculum.

Evidence may include the following:

- Safety rules for students

- Course syllabus showing covered safety topics

- Laboratory manuals with safety guidance

- Examinations or exercises used to teach or reinforce safety concepts

- Safety course offerings

- Description(s) of offered seminar(s) on safety topics

- Results of safety research

- Other examples as appropriate

4. Description of, or documentation for, chemical waste collection policies and procedures for instructional courses and prep lab

5. Chemical storage policies

This may include descriptions of:

- Access control

- Segregation

- Protected storage

- Inventory tracking methods

- Storage quantity limitations, approvals for ordering new chemicals, etc.

6. Policies relating to the prep-lab space (if applicable), as well as written safety requirements for instructors and teaching assistants working during non-class times.

This may include:

- Separate Chemical Hygiene Plan for prep lab (or clear inclusion in the department CHP)

- General policies and procedures for use of and access to the space (e.g. restricted access, policy for not working alone in the lab, etc.)

7. Evidence of waste-minimization and green-chemistry strategies.

This may include:

- Policies for waste minimization or a list of prohibited materials

- Description of green practices used in the instructional labs

- Incorporation of sustainability concepts into the curriculum

8. Evidence of faculty and teaching-assistant safety training and development.

This may include:

- Description of safety training requirements and policies for individuals overseeing, or directly supervising, laboratory classes

- Learning objectives for the safety training that describe the skills or knowledge that the faculty and teaching assistants will acquire

- Copy of safety training materials for training instructors and T.A.s, if applicable

- Seminars, workshops, production of videotapes, slides, etc.

9. Lab Safety Event (incidents and near miss) reporting.

Documentation may include:

- Policy for reporting incidents

- Summary reports and analysis of incidents, injuries, and near misses from the previous 3 years

- Summary of lessons learned from events and corrective actions taken

- Example reports with root cause analysis and corrective actions

10. Policy, procedure, and frequency for routine assessments of laboratory’s physical condition (i.e. audits/inspections). Assessments may be internal (self-inspection) or external (e.g. EH&S department inspections)

Supporting documentation may include:

- Description of inspection process, frequency, and responsible parties

- Copy of inspection checklist

- Metrics or data demonstrating program effectiveness. This could include data regarding frequency of inspections, average number of findings, median time for corrective action, etc.

Required support for nomination

The nomination must include a cover letter from the nominator describing why the nominee is deserving of this award. The letter shall describe the nominee’s work and how it is aligned with the purpose, eligibility, and/or criteria of the award.

Other supporting materials are suggested in the details of the award criteria. The supporting information may be provided as links to applicable webpages on the organization’s website or as attached files. The documents and/or links must be labeled/named using the numbering and descriptions provided in the details of the award criteria. The name of the institution must be included in all file names.

The nomination may also include a letter of support from the institution’s Environmental Health and Safety office, as well as a letter of support from a senior administrator such as the head of the academic department, the vice provost for research, or the dean of the school; however, these letters are not required for this award.

Site visit criteria (if applicable)

If a nominee is eligible based on supporting documentation, a committee member or other ACS delegate will perform a site visit to verify.

As part of the site visit, the following laboratory and chemical-use area conditions of the undergraduate laboratory facility may be assessed:

- General ventilation

- Engineering controls in working order

- Housekeeping and general facility condition including chemical storage areas

- Adequate student supervision

- Security of chemical storage areas and general lab spaces

- Emergency irrigation tested and working

- Emergency response equipment and supplies stocked and inspected (spill kits, fire extinguishers, etc.)

- Personal protective equipment available and in good condition

- Posted emergency procedures and contact numbers

Eligible Sources of Nominations

- Self-nomination

- Subordinate (Student)

- Department chair

- EH&S Department

- Vice Provost for Research or another Senior Administrator

- Peer or another ACS member who is familiar with the nominee’s undergraduate program

Previous Winners

2022: University of Nevada, Reno, Environmental Health and Safety Department & Department of Chemistry

2021: C. Eugene Bennett Department of Chemistry, West Virginia University

2020: Massachusetts Institute of Technology Department of Chemistry Undergraduate Teaching Laboratory and Environment, Health & Safety Office

2019: University of Pittsburgh, Department of Chemistry and Department of EH&S

2018: University of North Carolina at Chapel Hill, Department of Chemistry and Department of Environment, Health and Safety

2017: Department of Chemistry and the Department of Environmental Health and Safety, Stanford University

2016: Duke University

2015: University of Pennsylvania

2014: University of California, Davis

2013: North Carolina State University

2012: Wittenberg University, Springfield, Ohio

2011: University of California, San Diego

2010: Princeton University

2009: Wellesley College

2008: Franklin & Marshall College

2007: University of Connecticut

2006: none awarded

2005: Massachusetts Institute of Technology and the University of Nevada-Reno

2004: University of Massachusetts-Boston

2003: none awarded

2002: none awarded

2001: West Virginia University

2000: none awarded

1999: Francis Marion University

1996: Williams College

1995: University of Wisconsin-Madison

1993: College of St. Benedict, jointly with St. John’s University of St. Joseph, MN

1991: Massachusetts Institute of Technology