04/14/22 Table Read for The Art & State of Safety Journal Club

Excerpts and comments from “A Transferable Psychological Evaluation of Virtual Reality Applied to Safety Training in Chemical Manufacturing”

Published as part of the ACS Chemical Health & Safety joint virtual special issue “Process Safety from Bench to Pilot to Plant” in collaboration with Organic Process Research & Development and Journal of Loss Prevention in the Process Industries.

Matthieu Poyade, Claire Eaglesham,§ Jordan Trench,§ and Marc Reid*

The full paper can be found here: https://pubs.acs.org/doi/abs/10.1021/acs.chas.0c00105

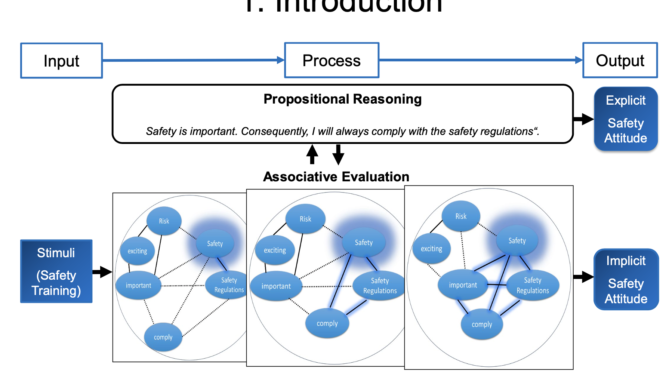

1. Introduction

Safety in Chemical Manufacturing

Recent high-profile accidents—on both research and manufacturing scales—have provided strong drivers for culture change and training improvements. While our focus here is on process-scale chemical manufacturing,[a][b][c][d][e][f][g][h][i][j] the similarly severe safety challenges exist on the laboratory scale; such dangers have been extensively reviewed recently. Through consideration of the emerging digitalization trends and perennial safety challenges in the chemical sector, we envisaged using interactive and immersive virtual reality (VR) as an opportune technology for developing safety training and accident readiness for those working in dangerous chemical environments.

Virtual Reality

VR enables interactive and immersive real-time task simulations across a growing wealth of areas. In higher education, prelab training in[k][l][m] VR has the potential to address these issues giving students multiple attempts to complete core protocols virtually in advance of experimental work, creating the time and space to practice outside of the physical laboratory.

Safety Educational Challenges

Safety education and research have evolved with technology, moving from videos on cassettes to online formats and simulations. However, the area is still a challenge, and very recent work has demonstrated that there must be an active link between pre-laboratory work and laboratory work in order for the advance work to have impact.

Study Aims

The primary question for this study can be framed as follows: When evaluated on a controlled basis, how do two distinct training methods[n][o][p][q][r][s], (1) VR training and (2) traditional slide training[t][u][v] (displayed as a video to ensure the consistency of the provision of training), compare for the same safety-critical task?

We describe herein the digital recreation of a hazardous facility using VR to provide immersive and proactive safety training. We use this case to deliver a thorough statistical assessment of the psychological principles of our VR safety training platform versus the traditional non-immersive training (the latter still being the de facto standard for such live industrial settings).

Figure 3. Summarized workflow for safety training case identification and comparative assessment of PowerPoint video versus VR.

2. Methods

After completing their training, participants were required to fill in standardized questionnaires which aimed to formally assess 5 measures of their training experiences.

1. Task-Specific Learning Effect

Participants’ post-training knowledge of the ammonia offload task was assessed in an exam-style test composed of six task-specific open-questions.

2. Perception of Learning Confidence

How well participants perform on a training exam and how they feel about the overall learning experience are not the same thing. Participant experiences were assessed through 8 bespoke statements, which were specifically designed for the assessment of both training conditions.

3. Sense of Perceived Presence

“Presence” can be defined as the depth of a user’s imagined sensation of “being there” inside the training media they are interacting with.

4. Usability

From the field of human–computer interaction, the System Usability Scale (SUS) has become the industry standard for the assessment of system performance and fitness for the intended purpose… A user answers their level of agreement on a Likert scale, resulting in a score out of 100 that can be converted to a grade A–F. In our study, the SUS was used to evaluate the subjective usability of our VR training system.
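For readers unfamiliar with how a SUS score "out of 100" arises, the following is a minimal sketch of the standard SUS scoring rule, assuming the usual 10-item questionnaire answered on a 1 to 5 agreement scale. The function name `sus_score` and the sample responses are illustrative and do not come from the paper, and the letter-grade conversion is left out because the excerpt does not give the thresholds.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` holds the ten answers in questionnaire order, each an
    integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, answer in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: higher is better.
            total += answer - 1
        else:
            # Even-numbered items are negatively worded: lower is better.
            total += 5 - answer
    # Ten items contribute 0-4 points each; scale the 0-40 sum to 0-100.
    return total * 2.5


# Made-up responses for one hypothetical participant (not study data).
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example))  # 75.0
```

A study-level figure such as the one reported in the Results would then be the mean of the per-participant scores.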

5. Sentiment Analysis

Transcripts of participant feedback—from both the VR and non-VR safety training groups—were used with the Linguistic Inquiry and Word Count (LIWC, pronounced “Luke”) program. Therein, the unedited and word-ordered text structure (the corpus) was analyzed against the LIWC default dictionary, outputting a percentage of words fitting psychological descriptors. Most importantly for this study, the percentage of words labeled with positive or negative affect (or emotion) were captured to enable quantifiable comparison between the VR and non-VR feedback transcripts.
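The "percentage of words fitting a descriptor" idea can be sketched in a few lines. This is only an illustration of dictionary-based word counting, not the LIWC program itself: the two tiny word sets below are hypothetical stand-ins built from the example words listed in Table 1, the real LIWC dictionary is far larger and also matches word stems, and the helper names `tokenize` and `category_percent` are assumptions.

```python
import re

# Hypothetical mini-dictionaries; the actual LIWC dictionary is proprietary
# and much larger.
POSITIVE_WORDS = {"love", "nice", "sweet"}
NEGATIVE_WORDS = {"hurt", "nasty", "ugly"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def category_percent(text, category_words):
    """Percentage of tokens in `text` that appear in `category_words`."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    hits = sum(1 for token in tokens if token in category_words)
    return 100.0 * hits / len(tokens)


# Made-up feedback sentence (not taken from the study transcripts).
feedback = "The headset was nice but my eyes hurt"
print(category_percent(feedback, POSITIVE_WORDS))  # 12.5
print(category_percent(feedback, NEGATIVE_WORDS))  # 12.5
```

Running the same function over each group's full transcript would give per-group percentages of the kind compared in the sentiment analysis.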

3. Results

Safety Training Evaluation

Having created a bespoke VR safety training platform for the GSK ammonia offloading task, the value of this modern training approach could be formally assessed versus GSK’s existing training protocols. Crucial to this assessment was the bringing together of experimental methods which focus on psychological principles that are not yet commonly practiced in chemical health and safety training assessment (Figure 3). All results presented below are summarized in Figure 8 and Table 1.

Figure 8. Summary of the psychological assessment of VR versus non-VR (video slide-based) safety training. (a) Task-specific learning effect. (b) Perception of learning confidence. (c) Assessment of training presence. (d) VR system usability score. In parts b and c, * and ** represent statistically significant results with p < 0.05 and p < 0.001, respectively.

1. Task-Specific Learning Effect (Figure 8a)

Task-specific learning for the ammonia offload task was assessed using a questionnaire[z][aa][ab][ac] built upon official GSK training materials and marking schemes. Overall, test scores from the Control group and the VR group showed no statistical difference between groups. However, there was tighter distribution[ad] around the mean score for the VR group versus the Control group.
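As an aid to discussion, here is one way a "no statistical difference between groups" check could be run on two sets of test scores. The excerpt does not say which test the authors used, so this sketch swaps in a two-sided permutation test on the difference in means, chosen because it needs only the Python standard library. The function name `permutation_p_value` and its parameters are assumptions.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Repeatedly shuffles the pooled scores, re-splits them into groups of
    the original sizes, and counts how often the shuffled difference in
    means is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    size_a = len(group_a)

    at_least_as_extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        shuffled = abs(mean(pooled[:size_a]) - mean(pooled[size_a:]))
        if shuffled >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_resamples + 1)
```

Calling `permutation_p_value(control_scores, vr_scores)` with the two lists of exam marks returns an estimated p-value; a value above the chosen threshold (0.05 in Figure 8) would be read as no detectable difference in mean score. The tighter spread noted for the VR group is a separate question, which `statistics.stdev` on each list would describe but this test does not assess.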

2. Perception of Learning Confidence (Figure 8b)

Participants’ perceived confidence of having gained new knowledge was assessed using a questionnaire composed of 8 statements, probing multiple aspects of the learning experience… Within a 95% confidence limit, the VR training method was perceived by participants to be significantly more fit for training purpose than video slides. VR also gave participants confidence that they could next perform the safety task alone[ae][af][ag]. Moreover, participants rated VR as having more potential than traditional slides for helping train in other complex tasks and to improve decision making skills (Figure 8b). Overall, participants from the VR group felt more confident and prepared for on-site training than those from the Control group.[ah]

3. Sense of Perceived Presence (Figure 8c)

The Sense of Presence questionnaire was used to gauge participants’ overall feeling of training involvement across four key dimensions. Results show that participants from the VR group reported experiencing a higher sense of presence than those from the Control group. On the fourth dimension, negative effects, participants from the Control group reported experiencing more negative effects than those from the VR group, but the result was not statistically different (Figure 8c). [ai]

4. Usability of the VR Training Platform (Figure 8d)

The System Usability Scale (SUS) was used to assess the effectiveness, intuitiveness, and satisfaction with which participants were able to achieve the task objectives within the VR environment. The average SUS score recorded was 79.559 (∼80, or grade A−), which placed our VR training platform on the edge of the top 10% of SUS scores (see Figure 5 for context). The SUS result indicated an overall excellent experience for participants in the VR group.

[Participants also] disagreed with any notion that the VR experience was too long (1.6 ± 0.7) and did not think it was too short (2.5 ± 1.1). Participants agreed that the simulation was stable and smooth (3.9 ± 1.2) and disagreed that it was in any way jaggy (2.3 ± 0.8). Hand-based interactions with the VR environment were agreed to be relatively intuitive (3.8 ± 1.3), and the head-mounted display was found to provide agreeable comfort for the duration of the training (4.0 ± 0.9).

5. Sentiment Analysis of Participant Feedback (Table 1)

In the final part of our study, we aimed to corroborate the formal statistical analysis against a quantitative analysis of open participant feedback. Using the text-based transcripts from both the Control and VR group participant feedback, the Linguistic Inquiry and Word Count (LIWC) tool provided further insight based on the emotional sentiment hidden in the plain text. VR participants were found to use more positively emotive words (4.2% of the VR training feedback corpus) versus the Control group (2.1% of the video training feedback corpus). More broadly, the VR group displayed a more positive emotive tone and used fewer negatively emotive words than the Control group.

Table 1. LIWC-Enabled Sentiment Analysis of Participant Feedback Transcripts

| LIWC variable | brief description | VR group | non-VR group |
| --- | --- | --- | --- |
| word count | no. of words in the transcript | 1493 | 984 |
| emotional tone | difference between positive and negative words (<50 = negative) | 83.1 | 24.2 |
| positive emotion | % positive words (e.g., love, nice, sweet) | 4.2% | 2.1% |
| negative emotion | % negative words (e.g., hurt, nasty, ugly) | 1.1% | 2.2% |

4. Discussion

Overall, using our transferable assessment workflow, the statistical survey analysis showed that task-specific learning was equivalent[aj][ak][al][am][an] for VR and non-VR groups. This suggests that the VR training in that specific context is not detrimental to learning and appears to be as effective as the traditional training modality but, crucially, with improved user investment in the training experience. However, the distribution difference between both training modalities suggests that the VR training provided a more consistent experience across participants than watching video slides, but more evaluation would be required to verify this.

In addition, perceived learning confidence and sense of perceived presence were reported to be all significantly better in VR over the non-VR group. The reported differences in perceived learning confidence between participants from both groups suggest that those from the VR group, despite having acquired a similar amount of knowledge, were feeling more assured about the applicability of that knowledge. These findings thus suggest that the VR training resulted in a more engaging and psychologically involving modality able to increase participants’ confidence in their own learning.[ao][ap][aq] Further research will also aim to explore the applicability and validation of the perceived learning confidence questionnaire introduced in this investigation.

Additionally, VR system usability was quantifiably excellent, according to the SUS score and feedback text sentiment analysis.

Although our experimental data demonstrate the value of the VR modality for health and safety training in chemical manufacturing settings, the sampling, and more particularly the variation in digital literacy[ar] among participants, may be a limitation to the study. Therefore, future research should explore the training validity of the proposed approach involving a homogeneously digitally literate cohort of participants to more rigorously measure knowledge development between experimental conditions.

4.1. Implications for Chemical Health and Safety Training

By requiring learners to complete core protocols virtually in advance of real work, VR pretask training has the potential to address issues of complex learning, knowledge retention[as][at][au], training turnover times, and safety culture enhancements. Researchers in the Chemical and Petrochemical Sciences operate across an expansive range of sites, from small laboratories to pilot plants and refineries. Therefore, beyond valuable safety simulations and training exercises, outputs from this work are envisaged to breed applications where remote virtual or augmented assistance can alleviate the significant risks to staff on large-scale manufacturing sites.

4.2. Optimizing Resource-Intensive Laboratory Spaces

As a space category in buildings, chemistry laboratories are significantly more resource-intensive than office or storage spaces. The ability to deliver virtual chemical safety training, as demonstrated herein, could serve toward the consolidation and recategorization, minimizing utility and space expenditure threatening sustainability.[av][aw][ax][ay][az][ba][bb] By developing the new Chemistry VR laboratories, high utility bills[bc][bd][be][bf] associated with running physical chemistry laboratories could potentially be significantly reduced.

4.3. Bridging Chemistry and Psychology

By bringing together psychological and computational assessments of safety training, the workflow applied herein could serve as a blueprint for future developments in this emerging multidisciplinary research domain. Indeed, the need to bring together chemical and psychological skill sets was highlighted in the aforementioned safety review by Trant and Menard.

5. Conclusions

Toward a higher standard of safety training and culture, we have described the end-to-end development of a VR safety training platform deployed in a dangerous chemical manufacturing environment. Using a specific process chemical case study, we have introduced a transferable workflow for the psychological assessment of an advanced training tool versus traditional slide-based safety training. This same workflow could conceivably be applied to training developments beyond safety.

Comparing our VR safety training versus GSK’s established training protocols, we found no statistical difference in the task-specific learning[bg] achieved in VR versus traditional slide-based training. However, statistical differences, in favor of VR, were found for participants’ positive perception of learning confidence and in their training presence (or involvement) in what was being taught. In sum, VR training was shown to help participants invest more in their safety training than in a more traditional setting for training[bh][bi][bj][bk][bl].

Specific to the VR platform itself, the standard System Usability Scale (SUS) found that our development ranked as “A–” or 80%, placing it toward an “excellent” rating and well within the level of acceptance to deliver competent training.

Our ongoing research in this space is now extending into related chemical safety application domains.

[a]I would think the expense of VR could be seen as more “worth it” at this scale rather than at the lab scale given how much bigger and scarier emergencies can be (and how you really can’t “recreate” such an emergency in real life without some serious problems).

[b]Additionally, I suspect that in manufacturing there is more incentive to train up workers outside of the lecture/textbook approach. Many people are hands on learners and tend to move into the trades for that reason.

[c]I was also just about to make a comment that the budget for training and purchasing and upkeep for VR equipment is probably more negligible in those environments compared to smaller lab groups

[d]Jessica…You can create very realistic large scale simulations. For example, I have simulated 50-100 barrel oil spills on water, in rivers, ponds and lakes, with really good results.

[e]Oh – this is a good point. What is not taken into account here is the comfort level people have with different types of learning. It would be interesting to know if Ph.D. level scientists and those who moved into this work through apprenticeship and/or just a BA would’ve felt differently about these training experiences.

[f]Neal – I wasn’t questioning that. I was saying that those things are difficult (or impossible) to recreate in real life – which is why being able to do a simulation would be more attractive for process scale than for lab scale.

[g]The skill level of the participants is not known. A pilot plant team spans very skilled technicians to PhD-level engineers and other scientists. I do not buy into Ralph’s observation.

[h]Jessica, I disagree. Simulated or hands-on is really valuable for learning skills that require both conceptual understanding and muscle memory tasks.

[i]I’m not disagreeing. What I am saying is that if you want to teach someone how to clean up a spill, it is a lot easier to spill 50 mL of something in reality and have them practice cleaning it up, than it is to spill 5000 L of something and ask them to practice cleaning it up. Ergo, simulation is going to automatically be more attractive to those who have to deal with much larger quantities.

[j]And the question wasn’t about skill level. It was about comfort with different sorts of learning. You can be incredibly highly skilled and still prefer to learn something hands-on – or prefer for someone to give you a book to read. The educational levels were somewhat being used as proxies for this (i.e. to get a PhD, you better like learning through reading!).

[k]Videos

The following YouTube links provide representative examples of:

i. The ammonia offload training introduction video; https://youtu.be/30SbytSHbrU

ii. The VR training walkthrough; https://youtu.be/DlXu0nTMCPQ

iii. The GSK ammonia tank farm fly through (i.e. the digital twin used in the VR training);

iv. The video slide training video; https://youtu.be/TZxJDJXVPgM

[l]Awesome – thank you for adding this.

[m]Very helpful. Thank you

[n]I’d be curious to see how a case 3 of video lecture then VR training compares, because this would have a focused educational component then focused skill and habit development component

[o]I’d be interested to see how in person training would compare to these.

[p]This would also open up a can of worms. Is it in-person interactive learning? Is it in-person hands-on learning? Or is it sitting in a classroom watching someone give a PowerPoint in-person learning?

[q]I was imagining hands-on learning or the reality version of the VR training they did so they could inspect how immersive the training is compared to the real thing. Comparison to an interactive training could also have been interesting.

[r]I was about to add that I feel like comparison to interactive in-person training would’ve been good to see. I tend to think of VR as same as an in-person training but just digital.

[s]That’s why I think it could be interesting. They could see if there is in fact a difference between VR and hands-on training. Then if there was none you would have an argument for a cost saving in space and personnel.

[t]Comparing these two very different methods is problematic. If you are trying to assess the added immersive value of VR then it needed to be compared to another ‘active learning’ method such as computer simulation.

[u]Wouldn’t VR training essentially be a form of computer simulation? I was thinking that the things being compared were 2 trainings that the employees could do “on their own”. At the moment, the training done on their own is typically some sort of recorded slideshow. So they are comparing to something more interactive that is also something that the employee can do “on their own.”

[v]A good comparison could have been VR with the headset and controllers in each hand compared to a keyboard and mouse simulation where you can select certain options. More like Oregon Trail.

[w]I’ve never seen this graphic before, but I love it! Excel is powerful but user-hostile, particularly if you try to share your work with someone else.

Of course, Google and Amazon are cash cows for their platforms, so they have an incentive to optimize System Usability (partially to camouflage their commercial interest in what they are selling).

[x]I found this comparison odd. It is claiming to measure a computer system’s fitness for purpose. Amazon’s purpose for the user is to get people to buy stuff. While it may be complex beyond the scenes, it has a very simple and pretty singular purpose. Excel’s purpose for the user is to be able to do loads of different and varied complex tasks. They are really different animals.

[y]Yes, I agree that they are different animals. However, understanding the limits of the two applications requires more IT education than most people get. (Faculty routinely comment that “this generation of students doesn’t know how to use computers”.) As a result, Excel is commonly used for tasks that it is not appropriate for. But you’re correct that Amazon and Google’s missions are much simpler than those Excel is used for.

[z]I’d be very interested to know what would have happened if the learners were asked to perform the offload task.

[aa]Yes! I just saw your comment below. I wonder if they are seeing no difference here because they are testing on a different platform than they trained on. It would be interesting to compare written and in-practice results for each group. Maybe the VR group would be worse at the written test but better at physically doing the tasks.

[ab]This could get at my concerns about the Dunning-Kruger effect that I mentioned below as well. Just because someone feels more confident that they can do something doesn’t mean that they are correct about that. It definitely would’ve been helpful to actually have the employees perform the task and see how the two groups compared. Especially since the purpose of training isn’t to pass a test – it is to actually be able to DO the task!

[ac]“Especially since the purpose of training isn’t to pass a test – it is to actually be able to DO the task!” – this

[ad]If I’m understanding the plot correctly, it looks like there is a higher skew in the positive direction for the control group which is interesting. I.e. lower but also higher scores. It seems to have an evening effect which makes the results of the training more predictable.

[ae]I’m slightly alarmed that VR gives people the confidence to perform the tasks alone when there was no statistical difference in the task-specific learning scores compared with the control group. VR seems to give a false sense of confidence.

[af]I had the same reaction to reading this. I don’t believe a false sense of confidence in performing a task alone to be a good thing. Confidence in your understanding is great, but overconfidence in physically performing something can definitely lead to more accidents.

[ag]A comment I’ve seen is that the VR trainees may perform better in doing the action than the control but they only gauged their knowledge in an exam style, not in actually performing the task they are trained to do. But regardless, a false confidence would not be good.

[ah]Wonder if there is concern that this creates a false confidence given the exam scores.

[ai]Wow these are big differences. There is basically no overlap in the error bars except the negative effect dimension.

[aj]If the task-specific learning was equivalent, but one method caused people to express greater confidence than the other, is this necessarily a good thing? Taken to an extreme perhaps, wouldn’t we just be triggering the Dunning-Kruger effect?

[ak]I’d be interested in the value of the VR option as refresher training, similar to the way that flight simulators are used for pilots. Sullenberger attributed surviving the bird strike at LaGuardia to lots of simulator time allowing him to react functionally rather than emotionally in the 30 seconds after birds were hit.

[al]I had the same comment above. I was wondering if people should be worried about false confidence. But the problem is that the assessment was on paper; they may be better at physically doing the tasks or responding in real time, and that was not tested.

[am]Exactly, Kali!

[an]From Ralph’s comment, I think there is a lot of value in VR for training for and simulating emergency situations without any of the risks.

[ao]Again, this triggers the question: should they be more confident in this learning?

[ap]I don’t think the available data let us distinguish between an overconfident test group and an under-confident control group (or both).

[aq]I am curious what the standard in the field is for determining at what point learners are overconfident. For example, are these good scores? Is there a correlation between higher test scores and confidence, or an inverse one, meaning false confidence?

[ar]I am curious as well how much training there needed to be to explain how the VR setup worked. That is a huge factor to how easy people will perceive the VR setup to be if someone walks them through it slowly, versus walking up to a training station and being expected to know how to use it.

[as]I suspect that if people are more engaged in the VR training, they would have better retention of the training over time, which would be interesting to explore. If so, that would be a great argument for VR.

[at]Right and if places don’t have the capacity to offer hands on training, an alternative to online-only is VR. Or, in high hazard work where it’s not advisable to train under the circumstances.

[au]I agree that there is a lot of merit for VR in high hazard or emergency response simulation and training.

[av]I’m personally more interested in the potential to use augmented reality in an empty lab space to train researchers to work with a variety of hazards/operations.

[aw]Right, this whole time I wasn’t thinking about replacing labs; I was thinking about adding a desk space for training. I am still curious about the logistics, though: in the era of COVID, will people really be comfortable sharing equipment, and how will it be maintained? That all costs money as well.

[ax]This really would open up some interesting arguments. Would learning how to do everything for 4 years in college by VR actually translate to an individual being able to walk into a real lab and work confidently?

[ay]From COVID, some portion of the labs they were able to do virtually – like moving this etc. From personal standpoint, I’d say no and like we talked about here – it would give a false sense of confidence which could be detrimental. It’s the same way I feel about “virtual fire extinguisher training.” I don’t think it provides any of the necessary exposure.

[az]Amanda, totally agree. The virtual fire extinguisher training does not provide the feel (heat), smell (fire & agent), or sound of a live fire exercise.

[ba]Oh wait – virtual training and “virtual reality training” are pretty different concepts. I agree that virtual training would never be able to substitute for hands-on experience. However, what VR training has been driving at for years is to try to create such a realistic environment within which to perform the training that it really does “feel like you are there.” I’m not sure how close we are to that. In my experiences with VR, I haven’t seen anything THAT good. But I likely haven’t been exposed to the real cutting edge.

[bb]Jessica, I wouldn’t recommend that AR/VR ever be used as the only training provided, but I suspect that it could shorten someone’s learning curve.

Amanda and Neal, having done both, I think that both have their advantages. The heat of the fire and kickback from the extinguisher aren’t well-replicated, but I actually think that the virtual extinguisher can do a better job of simulating the difficulty. When I’ve done the hands-on training, you’d succeed in about 1-2 seconds as long as you pointed the extinguisher in the general vicinity of the fire.

[bc]Utility bills for lab spaces are higher than average, but a small fraction of the total costs of labs. At Cornell, energy costs were about 5% of the cost of operating the building when you took the salaries of the people in the building into account. This explains why utility costs are not as compelling to academic administrators as they might seem. However, if there are labor cost savings associated with VR…

[bd]I’d love to see a breakdown of the fixed cost of purchasing the VR equipment, and the incremental cost of developing each new module.

[be]Ralph, it is so interesting to see the numbers; I had no idea it was that small of an amount. I suppose the argument might need to be wasted energy/environmental considerations then, rather than cost savings.

[bf]Yes, that is why I had a job at Cornell – a large part of the campus community was very committed to moving toward carbon neutrality and supported energy conservation projects even with long payback periods (e.g. 7 years).

[bg]It seems like it would be more helpful to have a paper-test and field-test to see if VR helped with physically doing the tasks, since that is the benefit that I see. In addition, looking at retention over time would be important. Otherwise the case is harder to make for VR if it’s not increasing the desired knowledge and skills

[bh]How much of the statistical favoring of VR was due to the VR platform being “cool” and “new” versus the participants’ familiarity with traditional approaches? I do not see any control in the document for this.

[bi]I agree that work as reported seems to be demonstrating that the platform provides an acceptable experience for the learner, but it’s not clear whether the skills are acquired or retained.

[bj]Three of the four authors are in the VR academic environment; one is in a chemistry department. There seems to be real bias toward showing that VR works in this.

[bk]One application I think this could help with is one I experienced today. I had to sit through an Underground Storage Tank training which was similar to the text-heavy PowerPoint in the video. If I had been able to self-direct my tour of the tank and play with the valves and detection systems, I would have been able to complete the regulatory requirements of understanding the system well enough to oversee its operations, but not well enough to have hands-on responsibilities. The hands-on work is left to the contractors who work on the tanks every day.

[bl]We have very good results using simulators in the nuclear power and aviation industries. BUT, there is still significant learning that occurs when things become real. The most dramatic example is landing high performance aircraft on carrier decks. Every pilot agrees that nothing prepares them for the reality of this controlled crash.